Last week, DeepLearning.AI launched a short course called LangChain: Chat With Your Data, taught by LangChain creator Harrison Chase.

I highly recommend this excellent one-hour course to anyone interested in using LangChain to build their own chatbot.

In this article, we will walk through the steps needed to quickly build an ESG chatbot of our own. We will use the Global Sustainable Fund Flows Q1 document from Morningstar, which can be downloaded here.

Along the way, we will point out some of the key concepts shown in the diagram below, which comes from the LangChain course. Let’s begin!

Document Loading

The first step in setting up a chatbot is loading the dataset we will retrieve information from. In our example, we are using the Morningstar fund flows data, which we have saved as a PDF in the same folder.

The steps here are relatively straightforward as long as you have the right libraries installed¹. The one thing worth highlighting in this step is that you need to save your API key in a dotenv (.env) file. Detailed instructions can be found here if you are not familiar with this process. As always, keep your API key secret: do not upload it to a public repository or share it with others.
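A dotenv file is just a plain-text file (usually named `.env`) made of `KEY=value` lines, for example `OPENAI_API_KEY=<your key>`. python-dotenv’s `load_dotenv()` reads it into the process environment, where the openai library looks for `OPENAI_API_KEY`. To show what that step does, here is a simplified, stdlib-only sketch. `load_env_file` is a hypothetical helper, not part of python-dotenv, and it ignores the quoting, escaping and variable-expansion rules the real library handles; in practice, just call `load_dotenv()`.

```python
import os
import tempfile


def load_env_file(path: str) -> dict[str, str]:
    """Hypothetical helper: load KEY=value lines from a .env file into os.environ.

    Existing environment variables are left untouched (python-dotenv's default
    behavior too). Blank lines and '#' comments are skipped; quoting is simplified.
    """
    loaded: dict[str, str] = {}
    with open(path, encoding="utf-8") as env_file:
        for raw_line in env_file:
            line = raw_line.strip()
            if not line or line.startswith("#") or "=" not in line:
                continue
            key, value = line.split("=", 1)
            key = key.strip()
            value = value.strip().strip("\"'")  # drop surrounding quotes, if any
            loaded[key] = value
            os.environ.setdefault(key, value)
    return loaded


if __name__ == "__main__":
    # Demo with a throwaway file and a placeholder value (never commit a real key).
    with tempfile.NamedTemporaryFile("w", suffix=".env", delete=False) as tmp:
        tmp.write("# OpenAI credentials\nOPENAI_API_KEY=sk-placeholder\n")
    print(load_env_file(tmp.name))
    os.remove(tmp.name)
```

Because the key only ever lives in the `.env` file and the environment, the notebook itself can be shared without exposing it, as long as `.env` is excluded from version control.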

We can see that our document has 35 pages, which matches the PDF.

Document Splitting

The purpose of document splitting is to break our original 35-page document into smaller chunks of text, so that later on only the most relevant chunks need to be retrieved and passed to the language model.

A few splitting methods are covered here, but for our purposes we will go with the Recursive Character Text Splitter.
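The idea behind a recursive character splitter fits in a few lines: try the coarsest separator first (blank lines between paragraphs) and fall back to finer ones (single newlines, spaces, then individual characters) only for pieces that are still longer than `chunk_size`. LangChain’s RecursiveCharacterTextSplitter uses the separators `"\n\n"`, `"\n"`, `" "` and `""` in that order by default, and it also supports `chunk_overlap` so neighboring chunks can share some text. The function below is a simplified illustration of the technique, not LangChain’s code: `recursive_split` is a hypothetical name, and it has no overlap.

```python
def recursive_split(
    text: str,
    chunk_size: int,
    separators: tuple[str, ...] = ("\n\n", "\n", " ", ""),
) -> list[str]:
    """Split text into chunks of at most chunk_size characters (no overlap)."""
    if chunk_size < 1:
        raise ValueError("chunk_size must be positive")
    if len(text) <= chunk_size:
        return [text] if text else []
    if not separators or separators[0] == "":
        # Last resort: hard cut into fixed-size character windows.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

    sep, finer = separators[0], separators[1:]
    chunks: list[str] = []
    current = ""
    for piece in text.split(sep):
        if len(piece) > chunk_size:
            # Piece is too big on its own: flush, then retry with finer separators.
            if current:
                chunks.append(current)
                current = ""
            chunks.extend(recursive_split(piece, chunk_size, finer))
            continue
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep merging small pieces into one chunk
        else:
            chunks.append(current)
            current = piece
    if current:
        chunks.append(current)
    return chunks


text = "First paragraph here.\n\nSecond paragraph is a bit longer than the first one."
for chunk in recursive_split(text, chunk_size=30):
    print(repr(chunk))
```

Paragraph boundaries are respected where possible; only the long second paragraph is broken at spaces. Keeping paragraphs or sentences together usually gives the embedding model more coherent chunks than cutting at a fixed character count.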

Embeddings + Vector Stores

Embedding is an important step in this process. In simple terms, an embedding converts a piece of text from a string into a vector of numbers. Once sentences are vectors, we can calculate similarity scores between them: sentences with similar meanings end up with vectors that point in similar directions.
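To make similarity scores concrete, here is a toy example. Real embedding models such as OpenAI’s produce dense vectors with hundreds or thousands of dimensions learned from data; the hypothetical `toy_embed` below simply counts words from a tiny hand-picked vocabulary, so it captures word overlap rather than meaning. Cosine similarity, which compares the direction of two vectors, is a common way to score how close two embeddings are.

```python
import math
from collections import Counter


def toy_embed(text: str, vocabulary: list[str]) -> list[float]:
    """Toy stand-in for an embedding model: count each vocabulary word in the text."""
    words = Counter(w.strip(".,?!").lower() for w in text.split())
    return [float(words[v]) for v in vocabulary]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction, 0.0 = orthogonal)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # an all-zero vector has no direction to compare
    return dot / (norm_a * norm_b)


vocab = ["sustainable", "fund", "flows", "europe", "weather"]
query = toy_embed("How did sustainable fund flows change in Europe?", vocab)
doc_a = toy_embed("This section covers sustainable fund flows in Europe.", vocab)
doc_b = toy_embed("The weather in Europe was mild.", vocab)
print(round(cosine_similarity(query, doc_a), 3))  # 1.0
print(round(cosine_similarity(query, doc_b), 3))  # 0.354
```

The sentence that shares all four vocabulary words with the question scores 1.0, while the one that only mentions Europe scores about 0.35.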

We will use OpenAI’s embeddings as our embedding model. After this step, we store the vectors for our text chunks in a vector database; here we use one called Chroma.
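Conceptually, a vector store keeps each chunk next to its vector and, at query time, returns the chunks whose vectors are closest to the query’s vector. The toy class below shows that idea with made-up three-dimensional vectors and a brute-force scan. It is not Chroma’s API (`ToyVectorStore` and `search` are hypothetical names), and real vector databases add persistence and approximate nearest-neighbor indexes to stay fast on large collections. In LangChain, the Chroma wrapper’s `similarity_search(query, k=...)` takes the question as text and embeds it for you.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


class ToyVectorStore:
    """In-memory stand-in for a vector database such as Chroma (not Chroma's API)."""

    def __init__(self) -> None:
        self._items: list[tuple[str, list[float]]] = []

    def add(self, text: str, vector: list[float]) -> None:
        """Store a text chunk together with its embedding vector."""
        self._items.append((text, vector))

    def search(self, query_vector: list[float], k: int = 2) -> list[str]:
        """Return the k stored chunks whose vectors are most similar to the query."""
        ranked = sorted(self._items, key=lambda item: cosine(query_vector, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


# Made-up 3-dimensional vectors; real embeddings come from a model such as OpenAI's.
store = ToyVectorStore()
store.add("Sustainable funds invest with ESG criteria in mind.", [0.9, 0.3, 0.0])
store.add("Fund flows measure money moving into or out of funds.", [0.2, 0.9, 0.0])
store.add("Chroma is an open-source vector database.", [0.0, 0.0, 1.0])

# Pretend this is the embedding of a question like "What are fund flows?"
print(store.search([0.1, 1.0, 0.0], k=2))
```

The search returns the fund-flows chunk first, then the ESG chunk, and leaves the unrelated Chroma sentence out: relevant passages, but no composed answer yet.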

One thing worth highlighting: if you are trying this at home, you will probably need a paid OpenAI account to run the embedding step and load the results into the vector database; otherwise, you are likely to run into quota limits.

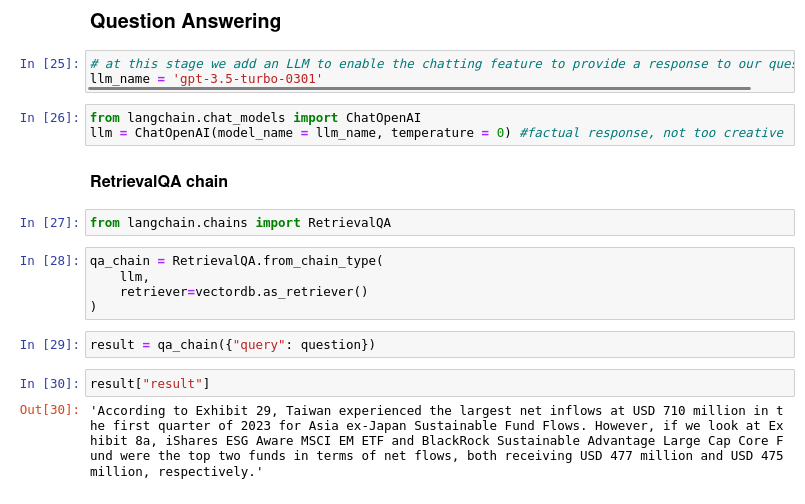

Question Answering

If we query the vector database directly, we can retrieve sentences that answer our questions, but the result is not exactly chatbot-like.

Think of this as an advanced Ctrl + F.

In the next stage, we add a large language model (we use the gpt-3.5-turbo-0301 version) to enable the chat feature. The result is the kind of conversational answer we are used to seeing from ChatGPT.
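The flow that a LangChain chain such as RetrievalQA wires up can be sketched in plain Python: retrieve the most relevant chunks, “stuff” them all into one prompt (LangChain’s default “stuff” chain type works this way), and hand that prompt to the chat model. In the sketch, `answer_question`, `fake_retrieve` and `fake_llm` are hypothetical names; the two fakes stand in for the vector store and the OpenAI model so the code runs without an API key.

```python
from typing import Callable


def answer_question(
    question: str,
    retrieve: Callable[[str], list[str]],
    llm: Callable[[str], str],
) -> str:
    """Retrieve relevant chunks, stuff them into one prompt, and let the LLM answer."""
    chunks = retrieve(question)      # e.g. top-k results from the vector store
    context = "\n\n".join(chunks)    # every retrieved chunk goes into a single prompt
    prompt = (
        "Use the context below to answer the question.\n\n"
        f"{context}\n\n"
        f"Question: {question}\nAnswer:"
    )
    return llm(prompt)               # e.g. a call to the chat model


# Placeholder stand-ins so the sketch runs without an API key.
def fake_retrieve(question: str) -> list[str]:
    return ["First relevant chunk.", "Second relevant chunk."]


def fake_llm(prompt: str) -> str:
    return f"(model reply to a {len(prompt)}-character prompt)"


print(answer_question("What drove fund flows this quarter?", fake_retrieve, fake_llm))
```

In a real setup, `retrieve` would query the vector store and `llm` would call the chat model; passing both in as arguments keeps the flow easy to test in isolation.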

We set the temperature to zero so that the responses are more deterministic and less creative, which suits a factual Q&A chatbot.
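Temperature controls how the model’s scores for candidate next tokens are turned into probabilities. The sketch below applies the standard softmax-with-temperature formula to three made-up scores: dividing by a smaller temperature sharpens the distribution toward the top token, and temperature zero is treated as greedy decoding (always pick the top token). It illustrates the general idea only and is not OpenAI’s implementation; `softmax_with_temperature` is a hypothetical name.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw next-token scores into probabilities at a given temperature."""
    if temperature <= 0:
        # Temperature 0 is treated as greedy decoding: all probability on the top score.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (1.0, 0.5, 0.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

As the temperature drops from 1.0 to 0.5, the top token’s probability rises, and at 0.0 it takes all of the probability mass, which is why low temperatures give more repeatable wording.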

Adding a Prompt

The response now reads like a chatbot’s reply instead of just returning a paragraph from the document, as we saw earlier.

In this section, we add a prompt to improve the result. A prompt primes the language model: it tells the model how to use the retrieved context and how to shape its answer.

In our prompt, we ask the chatbot not to make up an answer and to use at most three sentences, keeping the response concise. We also ask it to say “thanks for going green!” at the end of each answer:

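```python
from langchain.prompts import PromptTemplate

# Build prompt: {context} receives the retrieved chunks, {question} the user's question
template = """Use the following pieces of context to answer the question at the end. If you don't know the answer, just say that you don't know, don't try to make up an answer. Use three sentences maximum. Keep the answer as concise as possible. Always say "thanks for going green!" at the end of the answer.
{context}
Question: {question}
Helpful Answer:"""

# Wrap the string in a PromptTemplate so the QA chain can fill in both variables
QA_CHAIN_PROMPT = PromptTemplate(input_variables=["context", "question"], template=template)
```

Adding Chat History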

After adding the prompt, we should also give the chatbot some “memory” so that we can ask follow-up questions.

This is done by keeping a record of the conversation and passing it to the chain along with each new question.
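Conceptually, memory means keeping earlier turns and feeding them back to the model. In the course, this is done with LangChain’s ConversationBufferMemory plugged into a ConversationalRetrievalChain, which first asks the LLM to rewrite a follow-up question into a standalone one using the chat history, and then retrieves chunks for that rewritten question. The plain-Python sketch below mimics only that bookkeeping: `ChatHistory` and `condense_prompt` are hypothetical names, the prompt wording is illustrative rather than LangChain’s, and no model is called.

```python
from dataclasses import dataclass, field


@dataclass
class ChatHistory:
    """Minimal conversation buffer that keeps every (question, answer) turn in order."""

    turns: list[tuple[str, str]] = field(default_factory=list)

    def add_turn(self, question: str, answer: str) -> None:
        self.turns.append((question, answer))

    def as_text(self) -> str:
        return "\n".join(f"Human: {q}\nAssistant: {a}" for q, a in self.turns)


def condense_prompt(history: ChatHistory, follow_up: str) -> str:
    """Ask the LLM to rewrite a follow-up as a question that stands on its own."""
    return (
        "Given the conversation below, rephrase the follow-up question "
        "so that it can be understood without the conversation.\n\n"
        f"{history.as_text()}\n\n"
        f"Follow-up question: {follow_up}\n"
        "Standalone question:"
    )


history = ChatHistory()
history.add_turn(
    "Which region saw the largest sustainable fund inflows?",
    "<answer returned by the chatbot>",
)
# The LLM's rewrite of this prompt would then be used to query the vector store.
print(condense_prompt(history, "How does that compare with the previous quarter?"))
```

The rewrite step matters because a bare follow-up such as “How does that compare with the previous quarter?” says nothing about which region is meant, so searching the vector store with it directly would likely retrieve the wrong chunks.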

There we go! You now have your very own ESG chatbot telling you about sustainable fund flows.

You can also add a pretty user interface and even a document upload function to complete the chatbot, but at this stage I think we have covered the key steps.

With more and more ESG documents ranging from data methodologies to sustainability standards, the biggest use for a chatbot like this will be to help us get useful insights without having to parse through hundreds of pages.

As usual, you can access the GitHub notebook here if you want to follow along.

¹ The essential libraries are openai, python-dotenv, langchain, tiktoken, pypdf and chromadb.